NEW: v2.5.1 is now on PyPI! `pip install langchain-superlocalmemory` and `pip install llama-index-storage-chat-store-superlocalmemory`

Your AI Finally Remembers You

Stop re-explaining your codebase every session. 100% local. Zero setup. Completely free.

Install in One Command

```bash
npm install -g superlocalmemory
```

Auto-detects and configures 17+ AI tools, including ChatGPT. Requires Node.js 14+ and Python 3.8+.

To update:

```bash
npm update -g superlocalmemory
# or
npm install -g superlocalmemory@latest
```

Or download a platform-specific installer.

Or install from source (all platforms):

```bash
git clone https://github.com/varun369/SuperLocalMemoryV2.git
cd SuperLocalMemoryV2
./install.sh          # Mac/Linux
# Or: .\install.ps1   # Windows
```

Framework Integrations

Use SuperLocalMemory as a memory backend in your LangChain and LlamaIndex applications.

```bash
pip install langchain-superlocalmemory
```

```python
from langchain_superlocalmemory import SuperLocalMemoryChatMessageHistory

history = SuperLocalMemoryChatMessageHistory(
    session_id="my-session"
)
# Messages persist locally across sessions
```

```bash
pip install llama-index-storage-chat-store-superlocalmemory
```

```python
from llama_index.storage.chat_store.superlocalmemory import SuperLocalMemoryChatStore

chat_store = SuperLocalMemoryChatStore()
# Local SQLite backend, zero cloud
```

Both packages are 100% local: no cloud calls and no API keys for the memory layer. Your data stays on your machine.


The Problem

You: "Remember that authentication bug we fixed last week?"

Claude: "I don't have access to previous conversations..."

You: *sighs and explains everything again*

AI assistants forget everything between sessions. You waste time re-explaining your project architecture, coding preferences, previous decisions, and debugging history.

The Solution

```
# Save a memory
superlocalmemoryv2:remember "Fixed auth bug - JWT tokens were expiring too fast, increased to 24h"

# Later, in a new session...
superlocalmemoryv2:recall "auth bug"
# ✓ Found: "Fixed auth bug - JWT tokens were expiring too fast, increased to 24h"
```

Your AI now remembers everything. Forever. Locally. For free.

Features in Action

See how SuperLocalMemory transforms your AI workflow with real-time intelligence

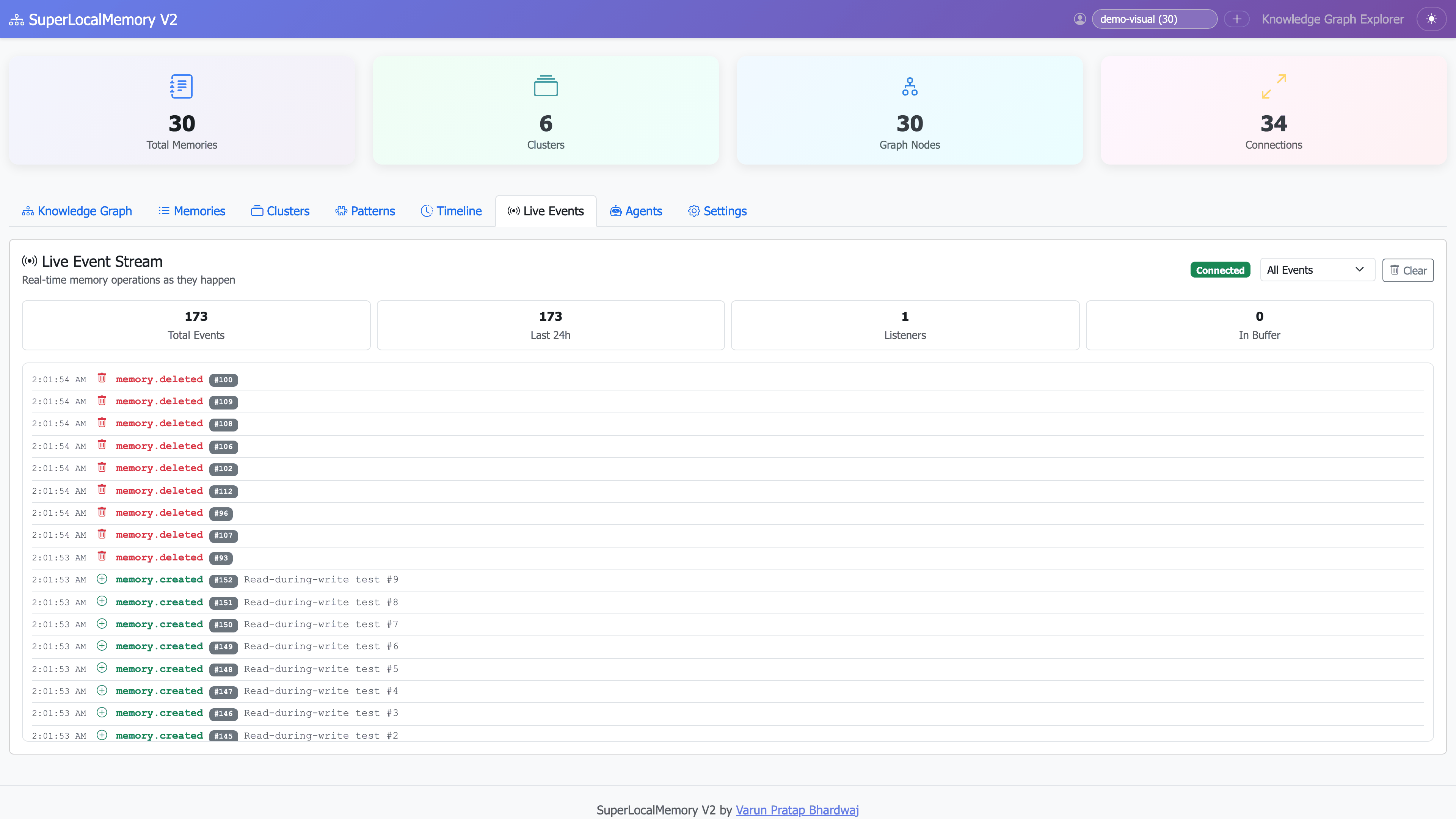

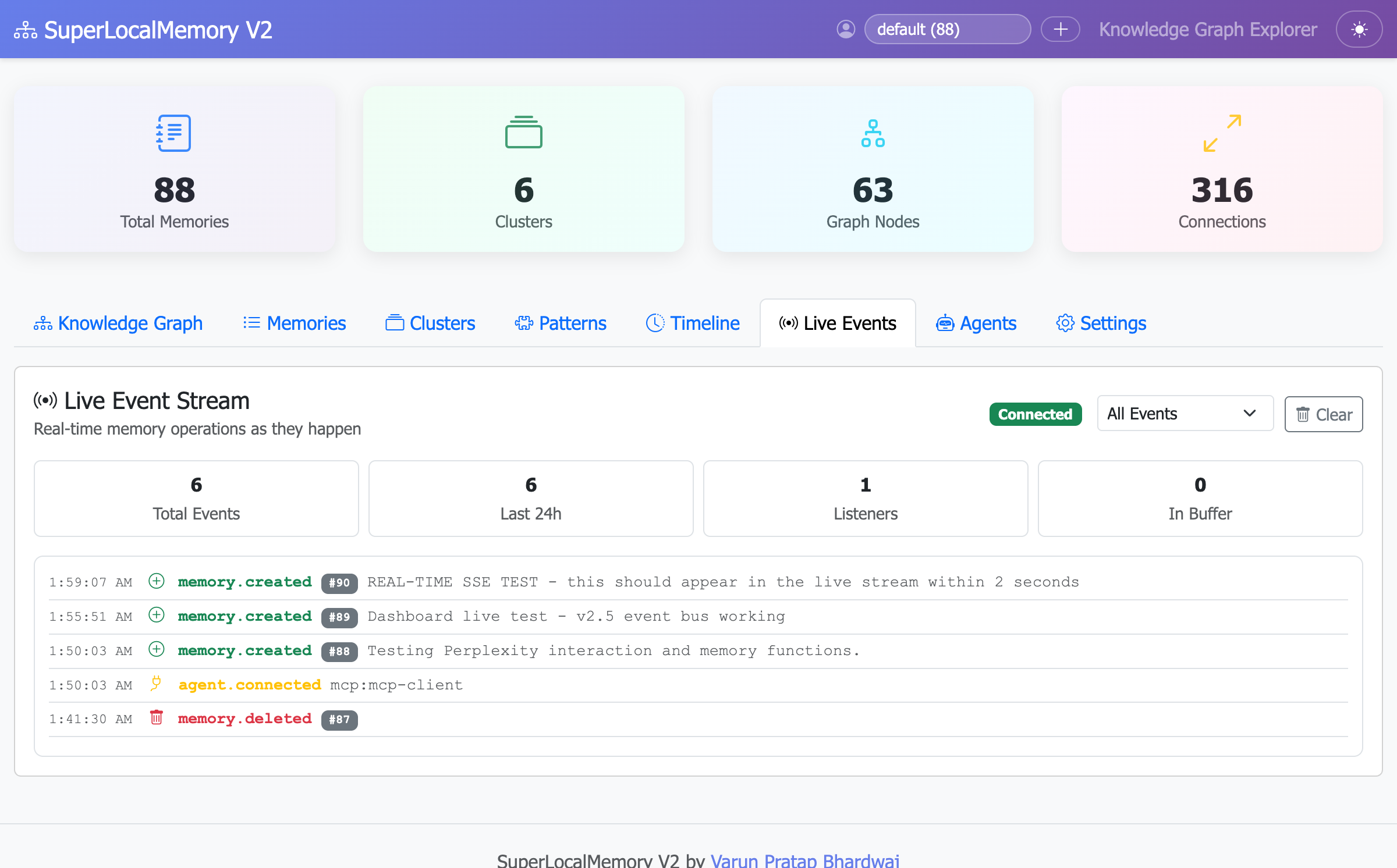

Real-Time Event Stream

Watch your AI's memory in real time. Every save, search, and recall appears instantly in the event stream. Visibility is cross-process: the CLI, MCP from Claude, MCP from Cursor, and the REST API all feed one unified stream, with no refresh needed.
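The dashboard receives these events over Server-Sent Events (SSE), a plain-text protocol, so any client can follow the stream. Below is a minimal standard-library sketch that parses SSE lines into (event, payload) pairs. The event names (`memory_created`, `memory_recalled`), the JSON payload fields, and the assumption that every payload is JSON are all hypothetical. The page does not document SuperLocalMemory's actual event schema or endpoint URL.

```python
import json
from typing import Dict, Iterable, Iterator, List, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, Dict]]:
    """Yield (event_name, payload) pairs from raw SSE text lines.

    Basic SSE framing: each field is "name: value", several "data:" lines
    are joined with newlines, a blank line dispatches the buffered event,
    and lines starting with ":" are comments (often keep-alives).
    """
    event_name = "message"  # SSE default when no "event:" field is sent
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:
            if data_lines:  # dispatch only if some data was buffered
                # Assumes each event's data is JSON (SSE itself does not require this).
                yield event_name, json.loads("\n".join(data_lines))
            event_name, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # SSE strips one leading space from values
        if field == "event":
            event_name = value
        elif field == "data":
            data_lines.append(value)


# Hypothetical stream: event names and payload fields are illustrative only.
sample = """\
: keep-alive

event: memory_created
data: {"id": 42, "source": "cli"}

event: memory_recalled
data: {"query": "auth bug", "source": "mcp"}

"""

events = list(parse_sse(sample.splitlines()))
for name, payload in events:
    print(name, payload["source"])
```

In practice you would feed `parse_sse` the lines of a streaming HTTP response from the dashboard's event endpoint, whose URL is not given here.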

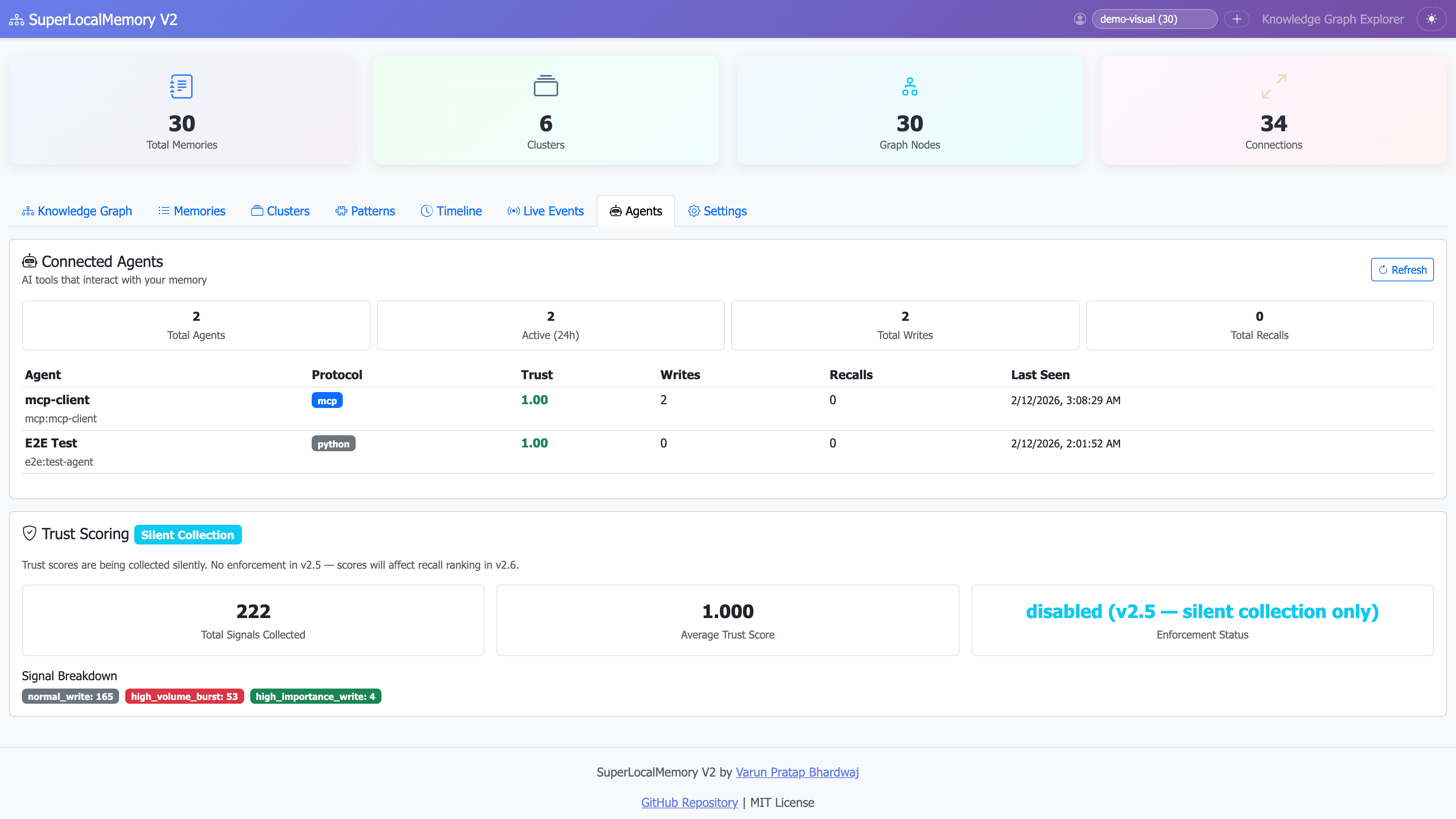

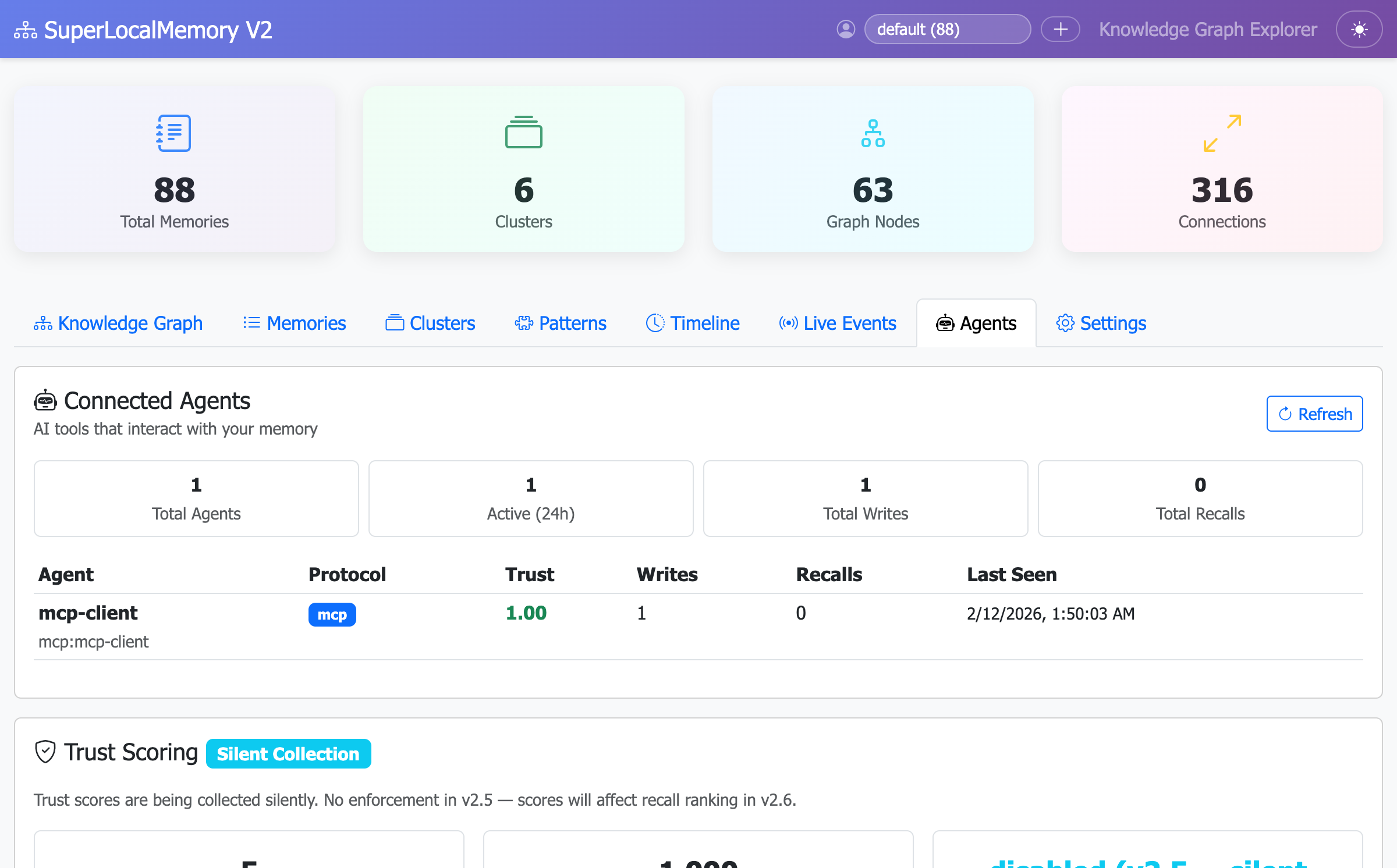

Agent Trust Tracking

Track which AI tools connect to your memory. See trust scores, protocols (MCP/A2A), write counts, and last-seen timestamps. Bayesian confidence scoring detects quality. Cross-agent validation boosts trust. Full audit trail for enterprise compliance.

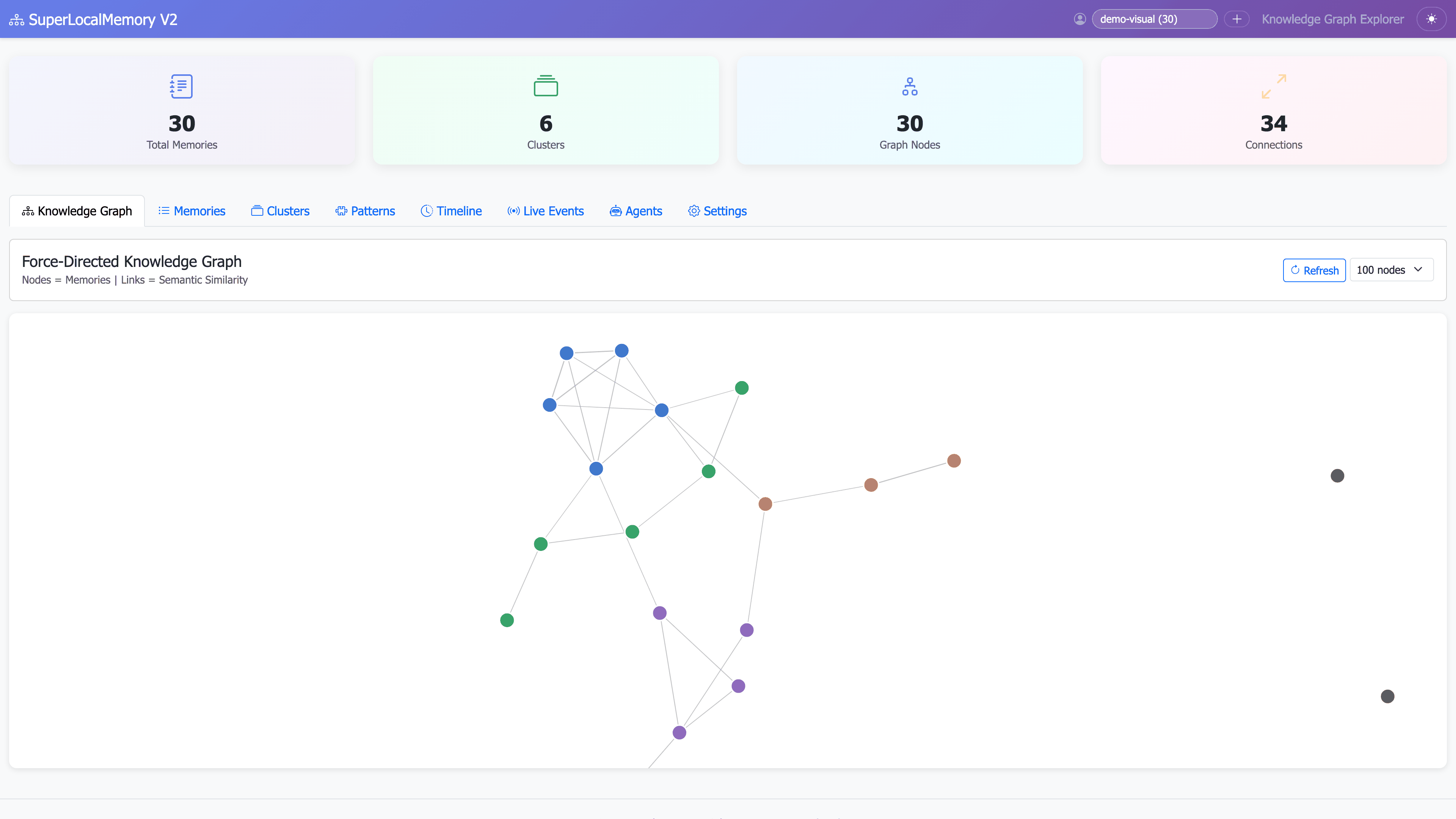

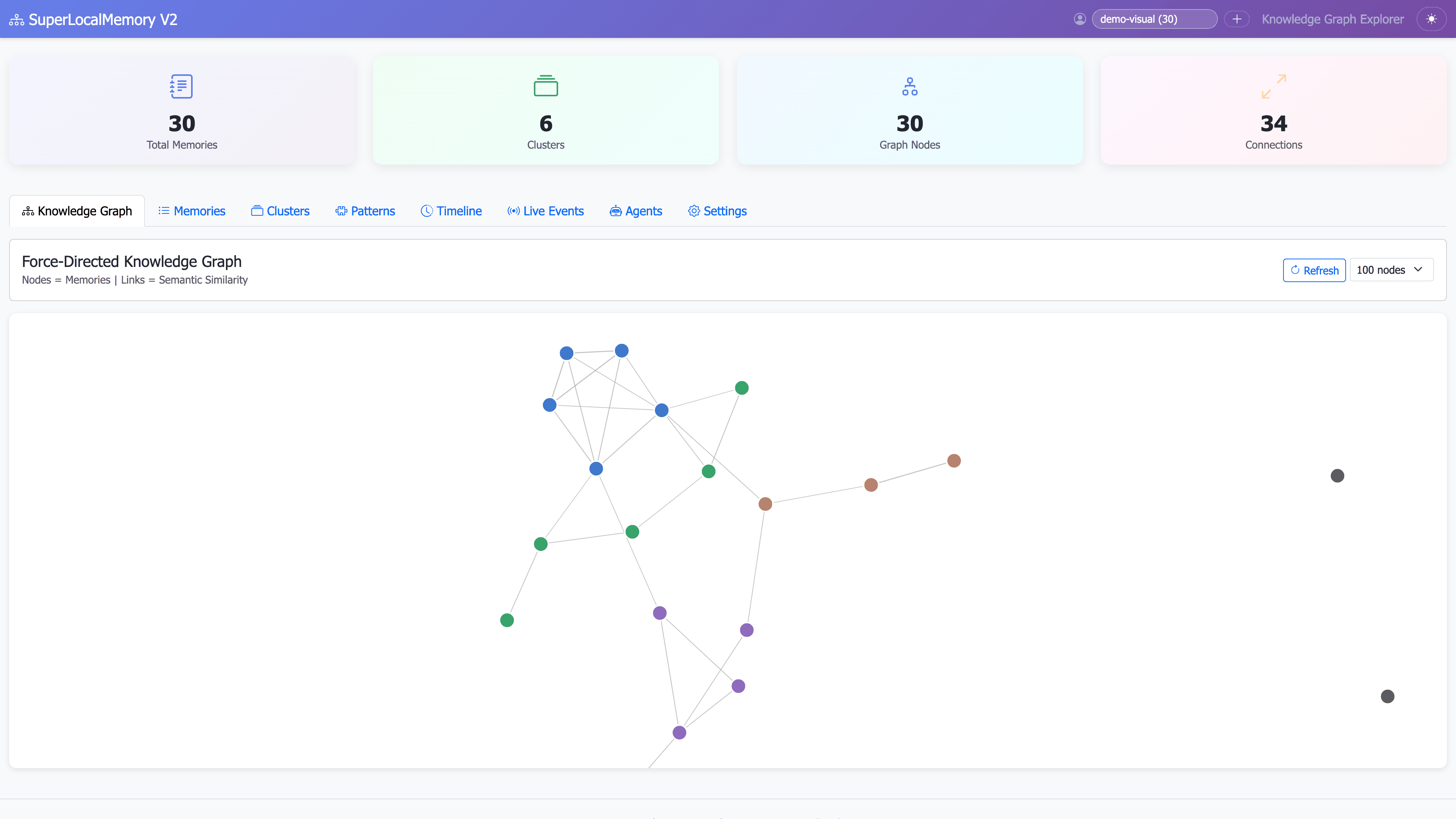

Knowledge Graph Discovery

Discover hidden connections. Your memories automatically cluster by topic using Leiden clustering, revealing insights you didn't know existed. TF-IDF entity extraction + hierarchical communities. Microsoft GraphRAG adapted for local-first operation.
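Here is a rough illustration of how TF-IDF can turn memories into graph edges. This is a simplified sketch, not SuperLocalMemory's extractor: it builds a TF-IDF vector per memory and links pairs whose cosine similarity exceeds a threshold. The tokenizer, the smoothed IDF formula, and the 0.1 threshold are assumptions. Leiden community detection would run on the resulting graph; that step is omitted here.

```python
import math
import re
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> List[str]:
    # Crude tokenizer: lowercase alphanumeric runs of 3+ characters.
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if len(t) >= 3]


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: how many memories contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        vectors.append({
            # Smoothed IDF (+1) so terms shared by many memories keep some weight.
            term: (count / len(toks)) * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def similarity_edges(docs: List[str], threshold: float = 0.1) -> Set[Tuple[int, int]]:
    """Link pairs of memories whose TF-IDF cosine similarity exceeds threshold."""
    vecs = tfidf_vectors(docs)
    return {
        (i, j)
        for i, j in combinations(range(len(docs)), 2)
        if cosine(vecs[i], vecs[j]) > threshold
    }


memories = [
    "Fixed auth bug: JWT tokens expired too fast",
    "JWT refresh tokens now rotate every 24h",
    "Switched CSS build to Tailwind",
]
print(similarity_edges(memories))  # {(0, 1)}
```

The two JWT memories are linked through their shared terms, and the unrelated CSS memory stays isolated.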

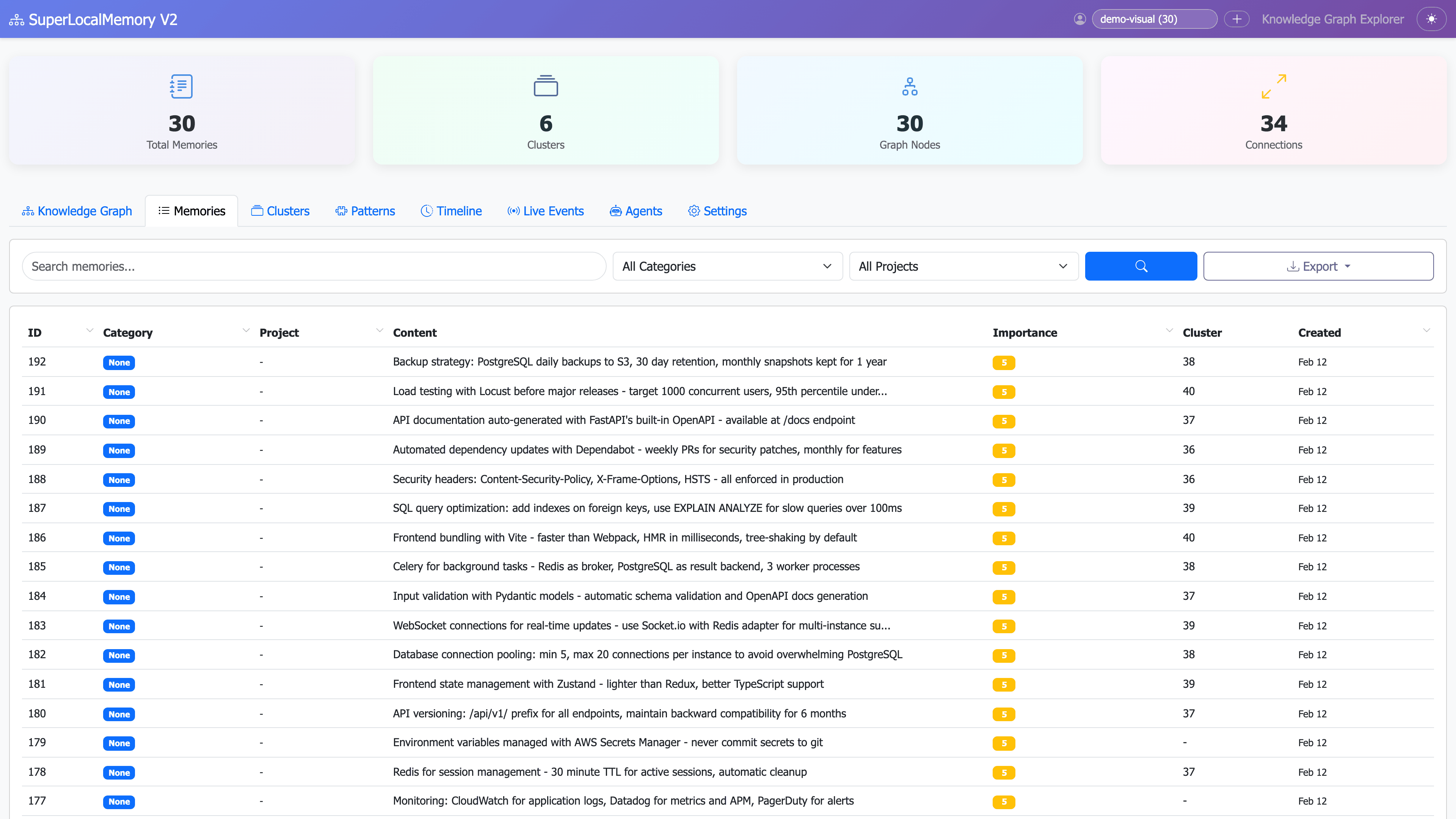

Hybrid Search That Never Misses

Three search engines in one: TF-IDF semantic search + SQLite FTS5 full-text + knowledge graph traversal. 80ms average. Never miss a memory. A-RAG architecture adapted for local operation with zero API calls.
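The page does not say how the three result lists are merged. One common, simple option is Reciprocal Rank Fusion (RRF), sketched below as an assumption rather than the project's documented method. The engine names and memory IDs are made up for illustration, and `k = 60` is the constant conventionally used with RRF.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: Dict[str, List[int]], k: int = 60) -> List[int]:
    """Merge ranked lists of memory IDs; each list contributes 1 / (k + rank)."""
    scores: Dict[int, float] = defaultdict(float)
    for ranked_ids in rankings.values():
        for rank, memory_id in enumerate(ranked_ids, start=1):
            scores[memory_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by smaller ID for determinism.
    return sorted(scores, key=lambda mid: (-scores[mid], mid))


# Hypothetical results from the three engines for the query "auth bug".
results = {
    "tfidf": [7, 3, 12],
    "fts5": [3, 7, 9],
    "graph": [3, 21],
}
print(reciprocal_rank_fusion(results))  # [3, 7, 21, 9, 12]
```

RRF uses only ranks, so it needs no normalization across engines whose raw scores (TF-IDF cosine, FTS5 bm25, graph distance) are not directly comparable.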

Simple CLI, Universal Access

One command to remember, one command to recall. Works everywhere: terminal, scripts, cron jobs, CI/CD pipelines. No configuration needed. Tab completion for bash and zsh.

Or use MCP integration for natural language access from Claude, Cursor, Windsurf, and the rest of the 17+ supported AI tools.
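For scripts or CI jobs written in Python, you can drive the CLI through `subprocess`. The `slm remember "content"` form comes from the integration table on this page. The `slm_remember` helper and its `dry_run` parameter are hypothetical illustrations, not part of SuperLocalMemory.

```python
import shutil
import subprocess
from typing import List


def slm_remember(content: str, dry_run: bool = False) -> List[str]:
    """Store a memory via the `slm` CLI (hypothetical helper).

    Returns the argv that was (or would be) executed. Passing arguments as a
    list avoids shell-quoting problems with arbitrary memory text.
    """
    cmd = ["slm", "remember", content]
    if dry_run:
        return cmd
    if shutil.which("slm") is None:
        raise FileNotFoundError("slm CLI not found on PATH; install superlocalmemory first")
    subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
    return cmd


# e.g. record a CI result after a deploy step (dry run: nothing is executed)
print(slm_remember("Deploy 1.4.2 passed smoke tests", dry_run=True))
```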

v2.5 — Your AI Memory Has a Heartbeat

From passive storage to active coordination layer

Real-Time Event Stream

Every memory operation fires a live event to the dashboard via SSE (Server-Sent Events). No refresh needed. Events are cross-process: CLI, MCP, and REST operations all appear in one stream.

Zero Database Locks

WAL mode + serialized write queue. 50 agents writing simultaneously? Zero "database locked" errors. Reads never block during writes.
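The page names the recipe (SQLite WAL mode plus a serialized write queue) but does not show it. Below is a minimal standard-library sketch of that pattern. One writer thread owns the only write connection and drains a queue, while readers open their own connections. The `memories` table schema and the `WriteQueue` class are assumptions for illustration.

```python
import os
import queue
import sqlite3
import tempfile
import threading

DB_PATH = os.path.join(tempfile.mkdtemp(), "memory.db")  # throwaway demo database


def open_conn(path: str) -> sqlite3.Connection:
    conn = sqlite3.connect(path, timeout=5.0)
    # WAL (write-ahead logging) lets readers proceed while a write is in progress.
    conn.execute("PRAGMA journal_mode=WAL")
    return conn


class WriteQueue:
    """Funnel all writes through one thread so writers never contend for the lock."""

    def __init__(self, path: str) -> None:
        self._jobs = queue.Queue()
        self._path = path
        self._ready = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()
        self._ready.wait()  # ensure the schema exists before anyone reads

    def _run(self) -> None:
        conn = open_conn(self._path)  # the only write connection, owned by this thread
        conn.execute("CREATE TABLE IF NOT EXISTS memories (id INTEGER PRIMARY KEY, content TEXT)")
        conn.commit()
        self._ready.set()
        while True:
            job = self._jobs.get()
            if job is None:  # sentinel from close()
                break
            sql, params = job
            conn.execute(sql, params)  # a real version would catch and report errors
            conn.commit()
        conn.close()

    def submit(self, sql: str, params: tuple = ()) -> None:
        """Safe to call from any thread; writes are applied later, in FIFO order."""
        self._jobs.put((sql, params))

    def close(self) -> None:
        """Flush queued writes, then stop the writer thread."""
        self._jobs.put(None)
        self._thread.join()


writer = WriteQueue(DB_PATH)
agents = [
    threading.Thread(
        target=writer.submit,
        args=("INSERT INTO memories (content) VALUES (?)", (f"note from agent {i}",)),
    )
    for i in range(50)
]
for t in agents:
    t.start()
for t in agents:
    t.join()
writer.close()

reader = open_conn(DB_PATH)
mode = reader.execute("PRAGMA journal_mode").fetchone()[0]
count = reader.execute("SELECT COUNT(*) FROM memories").fetchone()[0]
reader.close()
print(mode, count)  # wal 50
```

Because only one connection ever writes, the 50 concurrent "agents" never race for SQLite's write lock. They only contend for a thread-safe queue.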

Agent Tracking

Know exactly which AI tool wrote what. Claude, Cursor, Windsurf, Perplexity — all auto-registered with protocol, write counts, and last-seen timestamps.
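The per-agent bookkeeping described here (auto-registration, protocol, write count, last-seen time) can be sketched with a dictionary of records. The fields mirror what the page says is tracked. `AgentRecord`, `AgentRegistry`, and the agent names are hypothetical, not SuperLocalMemory's API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class AgentRecord:
    name: str
    protocol: str                      # e.g. "mcp" or "a2a"
    write_count: int = 0
    last_seen: Optional[datetime] = None


class AgentRegistry:
    """Hypothetical registry: agents are created on first write, never pre-configured."""

    def __init__(self) -> None:
        self._agents: Dict[str, AgentRecord] = {}

    def record_write(self, name: str, protocol: str) -> AgentRecord:
        record = self._agents.get(name)
        if record is None:  # auto-register on first contact
            record = self._agents[name] = AgentRecord(name, protocol)
        record.write_count += 1
        record.last_seen = datetime.now(timezone.utc)
        return record

    def __len__(self) -> int:
        return len(self._agents)


registry = AgentRegistry()
registry.record_write("claude-desktop", "mcp")
registry.record_write("cursor", "mcp")
claude = registry.record_write("claude-desktop", "mcp")
print(len(registry), claude.write_count)  # 2 2
```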

Trust Scoring

Bayesian trust signals detect quality. Cross-agent validation boosts trust; spam and quick deletes lower it. Scores are collected silently for now, with enforcement arriving in v2.6.
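The page does not publish the trust formula. One standard way to turn good and bad signals into a Bayesian score is a Beta-Bernoulli model. You start from a Beta(α, β) prior, add one to α for each positive signal (such as cross-agent validation), and add one to β for each negative signal (such as spam or a quick delete). The sketch below assumes that model and a uniform Beta(1, 1) prior. The signal names are illustrative.

```python
from dataclasses import dataclass

POSITIVE = {"cross_agent_validation"}      # hypothetical signal names
NEGATIVE = {"spam_flag", "quick_delete"}


@dataclass
class TrustScore:
    alpha: float = 1.0  # prior pseudo-count of positive signals
    beta: float = 1.0   # prior pseudo-count of negative signals

    def observe(self, signal: str) -> None:
        if signal in POSITIVE:
            self.alpha += 1
        elif signal in NEGATIVE:
            self.beta += 1
        else:
            raise ValueError(f"unknown signal: {signal}")

    @property
    def mean(self) -> float:
        # Posterior mean of Beta(alpha, beta): the expected trust level.
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        # Shrinks as evidence accumulates, i.e. confidence in the score grows.
        total = self.alpha + self.beta
        return self.alpha * self.beta / (total ** 2 * (total + 1))


score = TrustScore()
for s in ["cross_agent_validation"] * 3 + ["quick_delete"]:
    score.observe(s)
print(round(score.mean, 3))  # 0.667
```

A new agent starts at 0.5 with high variance. A few validations move it up quickly, and each negative signal pulls it back down.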

Memory Provenance

Every memory records its creator, protocol, trust score, and derivation lineage. Full audit trail for enterprise compliance.
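One way to represent derivation lineage is a parent pointer on each memory's provenance record. Following those pointers back gives the audit chain to the original memory. The sketch below assumes that design. The `Provenance` fields and the `lineage` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Provenance:
    memory_id: int
    created_by: str                     # agent that wrote the memory
    protocol: str                       # e.g. "mcp", "cli", "rest"
    trust_score: float                  # creator's trust at write time
    derived_from: Optional[int] = None  # parent memory, if this one was derived


def lineage(store: Dict[int, Provenance], memory_id: int) -> List[int]:
    """Return [memory_id, parent, grandparent, ...] back to the root."""
    chain: List[int] = []
    seen: Set[int] = set()
    current: Optional[int] = memory_id
    while current is not None:
        if current in seen:  # guard against corrupted, cyclic records
            raise ValueError(f"cycle in lineage at memory {current}")
        seen.add(current)
        chain.append(current)
        current = store[current].derived_from
    return chain


store = {
    1: Provenance(1, "claude-code", "mcp", 0.9),
    2: Provenance(2, "cursor", "mcp", 0.8, derived_from=1),
    3: Provenance(3, "cli-user", "cli", 0.7, derived_from=2),
}
print(lineage(store, 3))  # [3, 2, 1]
```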

Production-Grade Code

28 API endpoints across 8 modular routes. 13 modular JS files. 63 pytest tests covering concurrency, security, and edge cases.

Why SuperLocalMemory?

🔐 100% Local

Your data never leaves your machine. Zero cloud dependencies. GDPR/HIPAA compliant by default.

💰 100% Free

No usage limits. No credit systems. No subscriptions. Free forever. Unlike Mem0 ($50/mo) or Zep ($50/mo).

🌐 Works Everywhere

17+ AI tools supported: ChatGPT, Claude Code, Cursor, Windsurf, VS Code, Aider, Codex, Gemini CLI, JetBrains, and more. One database, all tools.

🧠 Knowledge Graph

Auto-discovers relationships between memories. Finds connections you didn't know existed.

🎯 Pattern Learning

Learns your coding style, preferences, and terminology. AI matches your style automatically.

⚡ Lightning Fast

80ms hybrid search. Sub-second graph builds. Tested up to 10,000 memories with linear scaling.

Universal Integration

SuperLocalMemory V2 is the ONLY memory system with dual-protocol support: MCP (agent-to-tool) and A2A (agent-to-agent).

| Tool | Integration | How It Works |
|---|---|---|
| Claude Code | ✅ Native Skills | /superlocalmemoryv2:remember |
| Cursor | ✅ MCP Integration | AI automatically uses memory tools |
| Windsurf | ✅ MCP Integration | Native memory access |
| Claude Desktop | ✅ MCP Integration | Built-in support |
| VS Code + Continue | ✅ MCP + Skills | /slm-remember or AI tools |
| VS Code + Cody | ✅ Custom Commands | /slm-remember commands |
| Aider | ✅ Smart Wrapper | aider-smart with auto-context |
| ChatGPT | ✅ MCP Connector | search() + fetch() via OpenAI spec |
| Any Terminal | ✅ Universal CLI | slm remember "content" |

Every integration method (skills, MCP, and the CLI) uses the SAME local database. No data duplication, no conflicts.

vs Alternatives

| Feature | Mem0 | Zep | Personal.AI | SuperLocalMemory V2 |
|---|---|---|---|---|
| Price | Usage-based | $50/mo | $33/mo | $0 forever |
| Local-First | ❌ Cloud | ❌ Cloud | ❌ Cloud | ✅ 100% |
| IDE Support | Limited | 1-2 | None | ✅ 17+ IDEs |
| MCP Integration | ❌ | ❌ | ❌ | ✅ Native |
| A2A Protocol | ❌ | ❌ | ❌ | 🔜 v2.5.0 |
| Pattern Learning | ❌ | ❌ | Partial | ✅ Full |
| Knowledge Graphs | ✅ | ✅ | ❌ | ✅ Leiden Clustering |
| Zero Setup | ❌ | ❌ | ❌ | ✅ 5-min install |

Performance That Scales

Benchmarks (v2.2.0)

| Operation | Time |
|---|---|
| Add Memory | < 10ms |
| Hybrid Search | 80ms |
| Graph Build | < 2s (100 memories) |
| Pattern Learning | < 2s |

Scalability

| Memories | Search Time | RAM |
|---|---|---|
| 100 | 35ms | 30MB |
| 500 | 45ms | 50MB |
| 1,000 | 55ms | 80MB |
| 5,000 | 85ms | 150MB |

Tested up to 10,000 memories with linear scaling and no degradation.

Built on 2026 Research

Not another simple key-value store. SuperLocalMemory implements cutting-edge memory architecture.

📄 PageIndex (VectifyAI)

Hierarchical memory organization with tree structures and breadcrumbs for O(log n) lookups.
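Here is a simplified illustration of hierarchical organization with breadcrumbs. It is not PageIndex or SuperLocalMemory's implementation. Each memory sits under a path of topic nodes, and a lookup walks one level per breadcrumb segment. The cost therefore grows with tree depth rather than with the total number of memories, and depth is logarithmic in n when the tree is balanced with a bounded branching factor. The `MemoryTree` class and topic names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    children: Dict[str, "Node"] = field(default_factory=dict)
    memories: List[str] = field(default_factory=list)


class MemoryTree:
    def __init__(self) -> None:
        self.root = Node("root")

    def add(self, breadcrumb: List[str], memory: str) -> None:
        # Create intermediate topic nodes on demand, then attach the memory.
        node = self.root
        for part in breadcrumb:
            node = node.children.setdefault(part, Node(part))
        node.memories.append(memory)

    def lookup(self, breadcrumb: List[str]) -> List[str]:
        # One dict lookup per level: cost is proportional to the breadcrumb depth.
        node = self.root
        for part in breadcrumb:
            child = node.children.get(part)
            if child is None:
                return []
            node = child
        return node.memories


tree = MemoryTree()
tree.add(["project", "backend", "auth"], "JWT expiry raised to 24h")
tree.add(["project", "frontend"], "Use Tailwind for styling")
print(" > ".join(["project", "backend", "auth"]), tree.lookup(["project", "backend", "auth"]))
```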

🕸️ GraphRAG (Microsoft)

Knowledge graph with Leiden clustering. Auto-discovers relationships and communities.

🎯 MemoryBank (AAAI 2024)

Identity pattern learning. Understands your coding style, preferences, and terminology.

⚡ A-RAG

Multi-level retrieval with context awareness. Hybrid search combining semantic, FTS5, and graph.

🤝 A2A Protocol (Google/LF)

Agent-to-Agent collaboration. AI agents discover each other and share memory context in real time. Coming in v2.5.0.

The only open-source memory system with both MCP + A2A protocol support.

Ready to Give Your AI a Memory?

Install in 5 minutes. Free forever. Works with 17+ IDEs.

Created by Varun Pratap Bhardwaj